AI consumability

We have various approaches for making our content visible to AI as well as making sure it's easily consumed in a plain-text format.

The primary proposal in this space is llms.txt ↗, offering a well-known path for a Markdown list of all your pages.

We have implemented llms.txt, llms-full.txt and also created per-page Markdown links as follows:

- llms.txt

- llms-full.txt
  - We also provide an llms-full.txt file on a per-product basis, e.g. /workers/llms-full.txt

- /$page/index.md
  - Add /index.md to the end of any page to get the Markdown version, e.g. /style-guide/index.md

- /markdown.zip
  - An export of all of our documentation in the aforementioned index.md format.
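To illustrate the URL conventions listed above, here is a minimal sketch of how a client might build these paths. The origin `https://docs.example.com` and the helper names are assumptions made for this example, not part of the site itself.

```typescript
// Hypothetical docs origin, used only for illustration.
const DOCS_ORIGIN = "https://docs.example.com";

/** Joins an origin and a path, collapsing duplicate slashes at the seam. */
function joinUrl(origin: string, path: string): string {
  return origin.replace(/\/+$/, "") + "/" + path.replace(/^\/+/, "");
}

/** Markdown version of a page: append /index.md to the page path. */
function pageMarkdownUrl(pagePath: string): string {
  const trimmed = pagePath.replace(/\/+$/, ""); // drop trailing slashes first
  return joinUrl(DOCS_ORIGIN, trimmed + "/index.md");
}

/** llms-full.txt for a single product, for example "workers". */
function productLlmsFullUrl(product: string): string {
  return joinUrl(DOCS_ORIGIN, product + "/llms-full.txt");
}

// Site-wide resources that live at fixed, well-known paths.
const siteWideUrls: string[] = ["llms.txt", "llms-full.txt", "markdown.zip"].map(
  (path) => joinUrl(DOCS_ORIGIN, path),
);

const styleGuideMarkdown = pageMarkdownUrl("/style-guide/");
const workersFull = productLlmsFullUrl("workers");
```

With the assumed origin, `styleGuideMarkdown` resolves to `/style-guide/index.md` and `workersFull` to `/workers/llms-full.txt` under that origin.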

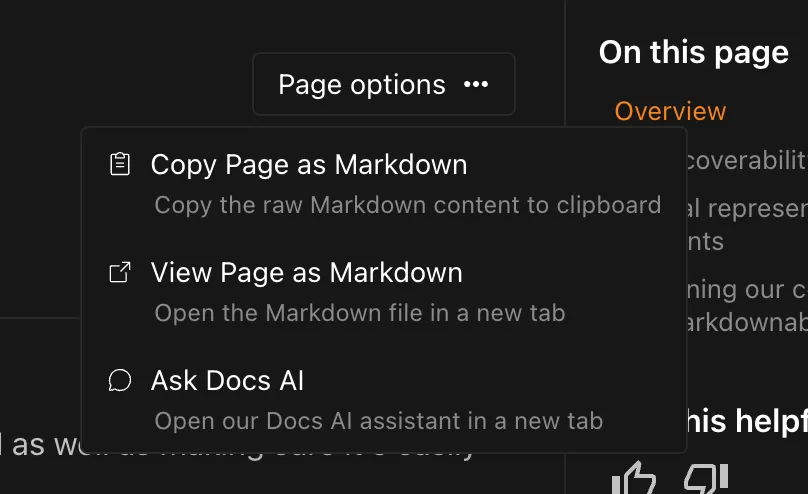

In the top right of this page, you will see a Page options button where you can copy the current page as Markdown that can be given to your LLM of choice.

Although HTML is easily parsed (after all, the browser has to parse it to decide how to render the page you're reading now), it tends not to be very portable. This limitation is especially painful in an AI context, because all the extra presentation information consumes additional tokens.

For example, given our Tabs, the panels are hidden until the tab itself is clicked:

If we run the resulting HTML from this component through a solution like turndown ↗:

- [One](#tab-panel-6)
- [Two](#tab-panel-7)

One Content

Two Content

The references to the panel ids, usually handled by JavaScript, are visible but non-functional.

To solve this, we created a rehype plugin ↗ for:

- Removing non-content tags (script, style, link, etc.) via a tags allowlist ↗

- Transforming custom elements ↗ like starlight-tabs into standard unordered lists

- Adapting our Expressive Code codeblocks HTML ↗ to the HTML that CommonMark expects ↗
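The allowlist step in the list above can be sketched as follows. This is a simplified illustration rather than the plugin's actual code: the node types are minimal stand-ins for a real HTML syntax tree, the allowlist contents are hypothetical, and dropping a disallowed element together with its whole subtree is an assumption about the behaviour.

```typescript
// Minimal stand-ins for HTML syntax tree nodes (assumed shape, not the real hast types).
type TextNode = { type: "text"; value: string };
type ElementNode = {
  type: "element";
  tagName: string;
  properties: { [name: string]: string | undefined };
  children: TreeNode[];
};
type TreeNode = TextNode | ElementNode;

// Hypothetical allowlist; a real one would cover many more content tags.
const ALLOWED_TAGS = new Set<string>(["div", "p", "ul", "li", "a", "pre", "code"]);

/** Returns a copy of the nodes in which every element outside the allowlist is dropped, subtree included. */
function filterByAllowlist(nodes: TreeNode[], allowed: Set<string>): TreeNode[] {
  const kept: TreeNode[] = [];
  for (const node of nodes) {
    if (node.type === "text") {
      kept.push(node); // text is always content
    } else if (allowed.has(node.tagName)) {
      kept.push({ ...node, children: filterByAllowlist(node.children, allowed) });
    }
    // Any other element (script, style, link, ...) is skipped entirely.
  }
  return kept;
}

// Small helpers for building example trees.
const el = (tagName: string, children: TreeNode[] = []): ElementNode => ({
  type: "element",
  tagName,
  properties: {},
  children,
});
const text = (value: string): TextNode => ({ type: "text", value });

const page: TreeNode[] = [
  el("script", [text("track()")]),
  el("div", [el("style", [text("p { color: red }")]), el("p", [text("Hello")])]),
];
const cleaned = filterByAllowlist(page, ALLOWED_TAGS);
```

Here `cleaned` keeps only the `div` and its `p` child; the `script` and `style` elements, and the text inside them, are gone. An allowlist is safer than a blocklist in this setting because unknown or newly introduced presentational tags are excluded by default.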

Taking the Tabs example from the previous section and running it through our plugin will now give us a normal unordered list with the content properly associated with a given list item:

- One

  One Content

- Two

  Two Content

For example, take a look at our Markdown test fixture (or any page by appending /index.md to the URL).
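The tabs-to-list transformation described above can be sketched like this. It is an illustration under stated assumptions, not the plugin's real implementation: the node types are simplified stand-ins, and the tabs markup (anchor links whose `href` points at a panel `id`) is a hypothetical structure inferred from the `#tab-panel-6` style references shown earlier.

```typescript
// Simplified syntax tree nodes (assumed shape, not the real hast types).
type TextNode = { type: "text"; value: string };
type ElementNode = {
  type: "element";
  tagName: string;
  properties: { [name: string]: string | undefined };
  children: TreeNode[];
};
type TreeNode = TextNode | ElementNode;

const el = (
  tagName: string,
  properties: { [name: string]: string | undefined },
  children: TreeNode[],
): ElementNode => ({ type: "element", tagName, properties, children });
const text = (value: string): TextNode => ({ type: "text", value });

/** Collects every descendant element, depth first, that satisfies the predicate. */
function findAll(node: ElementNode, match: (e: ElementNode) => boolean): ElementNode[] {
  const found: ElementNode[] = [];
  for (const child of node.children) {
    if (child.type !== "element") continue;
    if (match(child)) found.push(child);
    found.push(...findAll(child, match));
  }
  return found;
}

/** Concatenates all text inside a node. */
function textOf(node: TreeNode): string {
  return node.type === "text" ? node.value : node.children.map(textOf).join("");
}

/** Rewrites a tabs element as a <ul> with one <li> per tab, holding the label and its panel content. */
function tabsToList(tabs: ElementNode): ElementNode {
  const links = findAll(tabs, (e) => e.tagName === "a" && (e.properties.href ?? "").startsWith("#"));
  const items = links.map((link) => {
    const panelId = (link.properties.href ?? "").slice(1); // "#tab-panel-6" -> "tab-panel-6"
    const panel = findAll(tabs, (e) => e.properties.id === panelId)[0];
    const label = el("p", {}, [text(textOf(link))]);
    return el("li", {}, [label, ...(panel ? panel.children : [])]);
  });
  return el("ul", {}, items);
}

// Hypothetical markup for a two-tab component.
const tabs = el("starlight-tabs", {}, [
  el("ul", { role: "tablist" }, [
    el("li", {}, [el("a", { href: "#tab-panel-6" }, [text("One")])]),
    el("li", {}, [el("a", { href: "#tab-panel-7" }, [text("Two")])]),
  ]),
  el("div", { id: "tab-panel-6" }, [el("p", {}, [text("One Content")])]),
  el("div", { id: "tab-panel-7" }, [el("p", {}, [text("Two Content")])]),
]);
const list = tabsToList(tabs);
```

The resulting `list` has two items, each pairing a tab label with the content of the panel it pointed to, so a Markdown converter no longer has to resolve the id references.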

Most AI pricing is based on input and output tokens, and our approach greatly reduces the number of input tokens required.

For example, let's take a look at the number of tokens required for the Workers Get Started page, using OpenAI's tokenizer ↗:

- HTML: 15,229 tokens

- turndown: 3,401 tokens (4.48x fewer than HTML)

- index.md: 2,110 tokens (7.22x fewer than HTML)

When providing our content to AI, we can see a real-world ~7x saving in input token cost.
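The ratios above follow directly from the token counts. As a quick check, the arithmetic can be reproduced with a few lines; the function and variable names are chosen for this example only.

```typescript
// Token counts reported above for the Workers Get Started page.
const tokenCounts = { html: 15229, turndown: 3401, indexMd: 2110 };

/** How many times fewer tokens `count` is than the baseline, to two decimals. */
function reductionFactor(baseline: number, count: number): string {
  return (baseline / count).toFixed(2);
}

/** Share of the baseline tokens avoided, as a percentage with one decimal. */
function percentSaved(baseline: number, count: number): string {
  return ((1 - count / baseline) * 100).toFixed(1);
}

const turndownFactor = reductionFactor(tokenCounts.html, tokenCounts.turndown); // "4.48"
const indexMdFactor = reductionFactor(tokenCounts.html, tokenCounts.indexMd); // "7.22"
const indexMdSaved = percentSaved(tokenCounts.html, tokenCounts.indexMd); // "86.1"
```

Put another way, the index.md version avoids roughly 86% of the input tokens that the raw HTML would consume for this page.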
